GPT-4o and the Google I/O AI War (History, New Models and My Thoughts)

The AI war that will shape the human experience and the future of human-computer interaction.

Google vs OpenAI: a rivalry as old as time (not really)

Actually, it's not that old, but Google did a cute flex on January 28, 2020 [1], when they said:

we present Meena, a 2.6 billion parameter end-to-end trained neural conversational model.

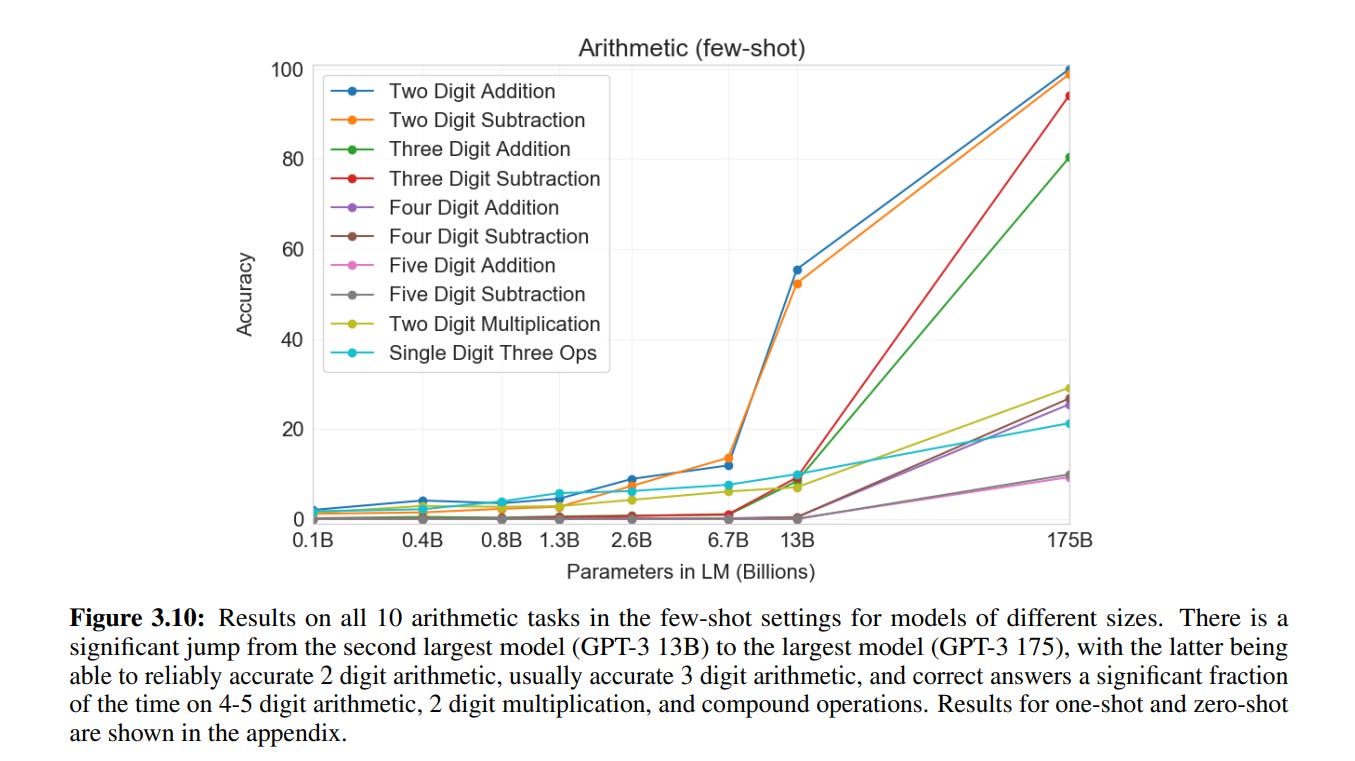

Compared to an existing state-of-the-art generative model, OpenAI GPT-2, Meena has 1.7x greater model capacity and was trained on 8.5x more data.

If you are here because you were living under a rock back then and had no idea about the ongoing battle between these two companies, you are late to the party. You probably only heard about these AI chatbots after ChatGPT (running GPT-3.5) launched on November 30, 2022, which is more than two years too late. Anyhow, OpenAI clapped back with the GPT-3 paper [2]. They showed the world that scaling these models up might help with comprehension and reasoning. Their paper says:

Specifically, we train GPT-3, an autoregressive language model with 175 billion parameters, 10x more than any previous non-sparse language model, and test its performance in the few-shot setting

They had to show good results and prove they could compete with Google, because they were low on funding and this was their chance to show everyone that they had what it takes to change the field of AI once and for all. They delivered with the release of ChatGPT (GPT-3.5) on November 30, 2022 [3]. Sometimes desperation just leads to better outcomes and faster progress. Google, meanwhile, was using transformers in totally different ways and missed out on the chatbot side of things.

OpenAI’s moment to shine:

ChatGPT was an overnight success: it reached 100 million active users in 2 months, and no one, not even OpenAI, anticipated that. People were amazed that it could attempt the same things they were supposed to do themselves.

Writing? Check. Translation? Check. Coding? Also check. That wow factor, plus the fact that most normies have no idea how the tech behind it works, created a holy shit moment for people.

Scaring Google and other tech giants with a possible extinction event:

They were the ones who showed people the real power of these AI models and that they can change everything, and sure enough, everyone was suddenly interested in building their own AI models. People speculated that OpenAI would take over websites like Stack Overflow [4]. Big tech realized that if they didn't compete with OpenAI and have systems in place that they could control, they might be next.

Now I am going to assume that if you've read this far, you already know what happened next in AI, so I won't link more articles about LLaMA, GPT-4 being dropped, and then the Gemini and Gemma models.

OpenAI not letting any other company take the spotlight:

For better or for worse, OpenAI is very selective about what they release and when. They specifically pick days right before or after big announcements from other companies so they always stay in the conversation.

These may be lighthearted jokes, but people were taking them very seriously. AI has its own tinfoil-hat pipeline as well, and AI HYPE YOUTUBE VIDEOS can’t seem to get enough of the drama.

Google DeepMind researcher Sholto Douglas on the Dwarkesh Patel podcast (published in 2024):

Let's start by talking about context lengths. It seemed to be underhyped, given how important it seems to me, that you can just put a million tokens into context. There's apparently some other news that got pushed to the front for some reason, but tell me about how you see the future of long context lengths and what that implies for these models.

Sholto Douglas 00:01:28

So I think it's really underhyped. Until I started working on it, I didn't really appreciate how much of a step-up in intelligence it was for the model to have the onboarding problem basically instantly solved.

You can see that a bit in the perplexity graphs in the paper where just throwing millions of tokens worth of context about a code base allows it to become dramatically better at predicting the next token in a way that you'd normally associate with huge increments in model scale. But you don't need that. All you need is a new context. So underhyped and buried by some other news.

Transcript taken from Dwarkesh’s Substack.

May 13th OpenAI announcements:

Now let's see where OpenAI stands right now. Their new SOTA model is GPT-4o [5], which they claim is better than GPT-4 Turbo, and it's end-to-end multimodal. People don't generally know what end-to-end means, so I'm about to put my nerd hat on.

What even is multimodality, and why is end-to-end such a big deal:

Multimodal Large Language Models (LLMs) can process and generate multiple types of data simultaneously, such as text, images, sound and more. This gives them a new perspective and more context, just like we humans get more context by using all five of our senses to make sense of the world around us.

ChatGPT launched its voice mode on September 25, 2023 [6], which lets you use your voice to interact with the AI, but it was slow, really clunky, and just not natural to talk to. If you pause mid-sentence to think or to articulate better, it processes the input anyway, even though you only asked half a question, and you can't interrupt it to clarify anything.

GPT-4o processes audio natively, so it can express and understand emotion, and it does not have to rely on a slow cascade: transcribe the audio to text → feed the text to the LLM → get the answer back → convert the answer back to speech. Even if we assume 200 ms of latency per step under ideal conditions (which is very generous), there are still 4 steps, so roughly 800 ms before you hear anything.

OpenAI claims in their blog post [5]:

Prior to GPT-4o, you could use Voice Mode to talk to ChatGPT with latencies of 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4) on average. [...] It can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.
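To make the latency argument concrete, here is a minimal Python sketch of the back-of-the-envelope arithmetic. It assumes the generous 200 ms per step for the cascaded speech → text → LLM → speech pipeline described above; the stage names are hypothetical labels, not real APIs, and the only real figures are the averages OpenAI reports.

```python
# Back-of-the-envelope latency comparison (illustrative only).
# Stage names are hypothetical labels; 200 ms each is the article's assumption.
CASCADE_STAGES_MS = {
    "speech_to_text": 200,
    "llm_inference": 200,
    "return_answer": 200,
    "text_to_speech": 200,
}

# Average voice latencies reported by OpenAI, in milliseconds.
REPORTED_AVG_MS = {
    "voice mode (GPT-3.5)": 2800,
    "voice mode (GPT-4)": 5400,
    "GPT-4o": 320,
}


def cascade_latency_ms(stages: dict[str, int]) -> int:
    """Stages run one after another, so their latencies simply add up."""
    return sum(stages.values())


if __name__ == "__main__":
    cascade = cascade_latency_ms(CASCADE_STAGES_MS)
    print(f"ideal 4-step cascade: {cascade} ms")
    for name, ms in REPORTED_AVG_MS.items():
        print(f"{name}: {ms} ms ({ms / cascade:.1f}x the ideal cascade)")
```

Even the idealized cascade adds up to 800 ms, 2.5 times GPT-4o's reported 320 ms average, which is why removing the hand-offs between separate models matters so much for conversation.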

Some Demos and my Thoughts on them

ChatGPT counting slowly and quickly when prompted to do so

Demo where the AI translates back and forth in real time

Some thoughts on this demo:

This was probably the coolest demo of them all, IMO. It can enable so many new interactions: when you go to a new place, you don't have to carry a huge "Learn German in 3 Hours" book.

Since this feature is free, you might be able to go somewhere and interact with locals better, negotiate better with the shopkeeper, and so much more.

But this model can also process video as fast as audio:

In the next demos they showed how natively multimodal video can be a big value-add: it can help you fix something you have never done before and help you in so many other ways. The possibilities are endless.

Demo where they show the real-time video feature of GPT-4o

This demo really shows what a good AI model can do for you. Not only does it seem to understand the world (at the heart of it, transformers are just fancy statistics and associations; link if you really want to know what kind of math), it also infers what you might be doing based on many cues. Packaged like this, it really shows what is possible with these models. Totally WILD, right!

Can AI really help you learn, or teach you well? I'M NOT SURE

This is a demo with Sal Khan, the founder of Khan Academy, and it really made me think about the future of teaching. The next generation will be so privileged to have these tools at their disposal, and this is the worst this model will ever be. Imagine a future where you can learn iteratively with someone at roughly master's level in the domain helping you at all times, someone you can ask all the weird questions you might not be able to ask in class.

If AI tells you "you've been asking about this for 5 days": *you are too dumb*

If AI tells you "I have no idea what you've been asking about for 5 days": *you are too smart*

HOT TAKE ABOUT AI-ASSISTED LEARNING:

I personally don't find AI that helpful as a teacher, at least not in its current state. Like, do you need everything spoon-fed to you?? Is it supposed to read you a lullaby?? A big part of learning is struggling with the subject and being able to teach it to yourself. That said, I can see a very strong model with search and good RAG (retrieval-augmented generation) making information much easier to consume.

If I get enough hate for this take (feel free to comment anything), I might explain myself in a full post with better examples.

May 14th Google I/O announcements:

Google Trying to catch up:

Gemini 1.5 Pro now supports 2 million tokens of context, which is huge compared to other models (GPT-4 Turbo has 128K). You can simultaneously feed it multiple books and large code repositories, which gives the model more context to work with and, in return, makes it perform better. So far this is working very well for them: in my tests you can feed it whole libraries and ask about them, and the model is not reliant on tools like search or retrieval.
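As a rough illustration of why the window size matters, here is a minimal Python sketch that estimates whether a pile of documents fits in a context window. It assumes the common rule of thumb of about 4 characters per token for English text and code; real tokenizers vary, so treat the numbers as ballpark only. The model keys are just labels for the window sizes above (not API model IDs), and the 4,000-token answer reserve is an arbitrary assumption.

```python
import math

# Assumption: ~4 characters per token, a common rule of thumb for English
# text and code. Real tokenizers differ, so these are ballpark numbers.
CHARS_PER_TOKEN = 4

# Context window sizes mentioned in the article, in tokens (labels, not API IDs).
CONTEXT_WINDOWS = {
    "gemini-1.5-pro": 2_000_000,
    "gpt-4-turbo": 128_000,
}


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(texts: list[str], model: str, reserve_for_answer: int = 4_000) -> bool:
    """True if all texts, plus room for the model's answer, fit in the window."""
    needed = sum(estimate_tokens(t) for t in texts) + reserve_for_answer
    return needed <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    # Hypothetical repository: 300 files of ~20,000 characters each.
    repo_files = ["x" * 20_000] * 300
    for model in CONTEXT_WINDOWS:
        verdict = "fits" if fits_in_context(repo_files, model) else "does not fit"
        print(f"{model}: {verdict}")
```

Under these assumptions the hypothetical repo comes to about 1.5M tokens: it fits in a 2M window but is more than ten times what a 128K window can hold, so a smaller-context model would need chunking or retrieval instead.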


Project Astra:

According to Google → Building on our Gemini models, Project Astra explores the future of AI assistants that can process multimodal information, understand the context you’re in, and respond naturally in conversation [7].

In layman's terms, Google is going to offer a model that can see your video feed and tell you what it sees.

Thoughts on Project Astra’s demos:

A really bad UX choice I want to mention in this demo is that they let the user circle or draw on the screen to help the AI see better. If you are holding your phone, you should not have to do this; you could just point at the thing. Or better, if the AI sees that there is a speaker there, you could just conversationally ask it whatever you want to know. If you hold your phone in one hand and your other hand is free, you should be able to point in the right direction.

You can see the person in the demo having to awkwardly reach out with their thumb, glide it all over the screen, and then tap twice to close it?? One of the primary user groups for this use case will be visually impaired, partially sighted, and blind people, so this should be reliable enough that someone can point the camera in a general direction and, with little or no manual intervention, ask questions about the thing. Even if you are trying to fix something, humans are really good at making up names and describing what they see. This three-tap situation needs to be better, IMO.

Project Astra isn't that fast:

So Google is competing with OpenAI, as much as Sundar wants you to think otherwise, and the competitor in this case has a better product that works really fast. In OpenAI's demos the audio latency is nearly instant, and the video latency is really good too: they haven't talked about it in the blog posts, but the video also gets a remarkable sub-second response time. In its demo, Astra seems to wait and think a little longer. I hope they can somehow make it faster, because when you are using it as a conversational AI or with glasses, every 100 ms adds up.

The model powering OpenAI’s tech is their best model:

This is how we transition into talking about the new model Google unveiled: Gemini 1.5 Flash. According to the benchmarks [8], it's just a little dumber than the latest Gemini 1.5 Pro, and that’s about it, whereas OpenAI’s conversational and video experience will be powered by their flagship model, GPT-4o. So you can expect the latter to be more intelligent in the real world. I'm sure Google will make their product better, but for now it is what it is.

Gemini Flash:

As we just learned, this is the model powering Google's all-new Astra product, and it's promised to be a smart but fast model. You can check the related benchmarks here. Gemini Flash has a context length of 1M tokens. I wonder if this may help the AI remember more throughout your conversation, but then OpenAI can also do that if they cache your previous messages during the conversation. It would be really helpful if these assistants could remember long conversations; imagine having to remind the AI of things every 15 minutes, that’s a big no-no.
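One simple way a chat app might keep "memory" inside a fixed context window is a sliding window: keep the newest messages that fit in a token budget and drop the oldest ones first. This is a hypothetical Python sketch, not how Google or OpenAI actually manage conversation history; `ConversationMemory`, `Message`, and the 4-characters-per-token estimate are assumptions for illustration.

```python
from collections import deque
from dataclasses import dataclass

# Assumption: ~4 characters per token (rough heuristic, not a real tokenizer).
CHARS_PER_TOKEN = 4


@dataclass
class Message:
    role: str  # e.g. "user" or "assistant"
    text: str

    def tokens(self) -> int:
        return max(1, len(self.text) // CHARS_PER_TOKEN)


class ConversationMemory:
    """Hypothetical sliding-window memory: keeps the newest messages that
    fit in a fixed token budget and forgets the oldest ones first."""

    def __init__(self, budget_tokens: int) -> None:
        self.budget_tokens = budget_tokens
        self.messages: deque[Message] = deque()
        self.used_tokens = 0

    def add(self, message: Message) -> None:
        self.messages.append(message)
        self.used_tokens += message.tokens()
        # Evict from the front (oldest) until we are back within budget,
        # but always keep at least the newest message.
        while self.used_tokens > self.budget_tokens and len(self.messages) > 1:
            evicted = self.messages.popleft()
            self.used_tokens -= evicted.tokens()

    def context(self) -> list[Message]:
        """Messages that would be sent to the model with the next request."""
        return list(self.messages)


if __name__ == "__main__":
    memory = ConversationMemory(budget_tokens=10)
    for i in range(3):
        memory.add(Message("user", str(i) * 20))  # 20 chars, about 5 tokens each
    print([m.text[0] for m in memory.context()])
```

With a tiny 10-token budget the first message is forgotten as soon as the third arrives. A bigger window like Flash's 1M tokens does not change the mechanism; it just means the oldest messages get evicted much later.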

Gemini Flash pricing:

Gemini 1.5 Flash has a blended price of $0.79 per 1M tokens, with an input token price of $0.70 and an output token price of $1.05 [9].
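The blended figure looks like a weighted average that assumes three input tokens for every output token. That 3:1 ratio is my assumption, but it reproduces the quoted number: (3 × 0.70 + 1 × 1.05) / 4 = 0.7875 ≈ $0.79. Here is a minimal Python sketch of that arithmetic, plus a hypothetical helper for the cost of a single request:

```python
def blended_price(input_price: float, output_price: float,
                  input_parts: float = 3.0, output_parts: float = 1.0) -> float:
    """Weighted average price per 1M tokens for a given input:output mix.

    The 3:1 default is an assumption that happens to reproduce the $0.79 figure.
    """
    total_parts = input_parts + output_parts
    return (input_parts * input_price + output_parts * output_price) / total_parts


def request_cost(input_tokens: int, output_tokens: int,
                 input_price: float, output_price: float) -> float:
    """Dollar cost of one request, with prices quoted per 1M tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


if __name__ == "__main__":
    print(f"blended: ${blended_price(0.70, 1.05):.4f} per 1M tokens")
    # Hypothetical request: a 100K-token document in, a 1K-token summary out.
    print(f"one request: ${request_cost(100_000, 1_000, 0.70, 1.05):.4f}")
```

A blended price is only meaningful for workloads with roughly that mix; a chatbot that writes long answers to short prompts will pay closer to the output price.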

Gemini Flash speed:

Gemini 1.5 Flash has a median throughput of 139 tokens per second, making it faster than average.

Its latency (time to first token) is 0.52 seconds.
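Those two numbers combine into a rough estimate of how long a full answer takes to stream in: time to first token plus output length divided by throughput. A minimal Python sketch, assuming a constant decode rate (real endpoints only roughly follow this, and the figures above are medians, not guarantees):

```python
def response_time_s(output_tokens: int,
                    ttft_s: float = 0.52,
                    tokens_per_s: float = 139.0) -> float:
    """Rough estimate: time to first token plus output_tokens / throughput.

    Defaults are the median Gemini 1.5 Flash figures quoted above; assumes
    a constant decode rate, which real endpoints only approximate.
    """
    return ttft_s + output_tokens / tokens_per_s


if __name__ == "__main__":
    for n in (50, 200, 1_000):
        print(f"{n:>5} output tokens -> ~{response_time_s(n):.2f} s")
```

By this estimate a 200-token reply takes a little under 2 seconds end to end, which is why, for a conversational assistant, time to first token and streaming matter as much as raw throughput.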

Check out more in-depth tests of Gemini Flash here.

If you want to know how its pricing compares to other models, check this article.

Veo → Google’s take on video generators:

After OpenAI’s Sora [10], Google wants everyone to know they have Veo as well. I have some spicy takes on how actual professionals will use these models, and whether this is really creative, which I will share in an upcoming article.

What will this technology enable:

You have been hired as an Advertising Specialist for a small family-owned-and-operated business. Your role is to enhance their product through captivating visual storytelling. You have written an epic story and devised accompanying visuals, but budget constraints may hinder your ability to deliver the promised quality. Unfortunately, traveling for a drone shot is not possible, and the licensing fee for good drone footage exceeds the allotted budget. In this case, AI-generated video that convincingly emulates the desired visuals might be a viable solution.

If you believe that this technology will replace filmmakers or be featured in high-budget films anytime soon, I must inform you that it is simply not going to happen. Film enthusiasts analyze even the smallest details, such as how a creature weighing 30 times more than a person can bend a branch, or whether a puddle next to it accurately reflects its surroundings.

You can give this to your kid and make sure the cartoons are AI-generated, so you never have to worry about taking care of your child ever again??

Let it marinate:

It's been more than half a month since these announcements, and you've got to let the news age before you can properly comment on it. So I am now going to share my thoughts on how this whole fiasco played out.

OpenAI still seems to be compute-poor:

No surprises here at all. They have to train the new GPT-5 model, which is bigger than ever. I think they just gave people GPT-4o and gaslit them into thinking it's the best AI model they can use, so that idiots would listen and not use the Turbo model as much (the bell curve is real, and the discourse after the GPT-4o announcement showed that to me really well).

GPT-4o works really well, except when it doesn't. It's at the top of the leaderboard [11], yet people don't seem to like it that much [12].

Please refer to [13] for a good analysis.

The OpenAI crowd acted like a bunch of degens:

I get it, bro, it's a female voice that sounds like Scarlett Johansson, despite Sam Altman telling us "no, trust me, it's not." But certain people called it reminiscent of the movie Her, and they rejoiced, laughed, and were happy to have a virtual girl to talk to??

This is very trash-tier behaviour, IMO, and it was on display in Twitter comments all over [14].

Let that sink in: the future of AI and humanity is in the hands of a person who

lied confidently on multiple occasions

hid information from the board and downplayed the role of safety considerations

is gay asf (no problem whatsoever, I added this news article as a meme because the headline is hilarious) [15]

was told "Please don't use my voice for your demos" (by Scarlett, probably) and did it anyway to assert dominance (faces legal action → nothing new for him)

So this person is leading the frontier AI company. It sounds like some alternate timeline in a simulation, but sadly this is the timeline you and I are experiencing right now.

Some thoughts on the future of AI and the mess it is in right now:

One of the most important things in AI is reliability, which every AI company is having a hard time delivering right now. This matters not because these models are meant solely for recalling facts, but because they are increasingly used for tool use and function calling.

Humans are so accustomed to typing 1+1 into a calculator and consistently getting the same result. However, with a funky prompt or unusual temperature settings, your LLM might actually convince you that its wrong answer is correct. While this may not be an issue for verifiable facts, it becomes a problem when you allow your LLM to use tools. This non-deterministic behavior is undesirable because it may trigger unwanted agentic actions or produce information you can't verify on the spot, and you have no control over it. LLM users often express frustration that LLMs seem lazy or fail to provide the expected answers. In reality, LLMs sampled at a non-zero temperature are not deterministic systems: it's like rolling a die every time and hoping for the best outcome.
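To show where the dice come from, here is a minimal Python sketch of temperature sampling over a made-up next-token distribution. The tokens and scores are hypothetical; real models sample over vocabularies of tens of thousands of tokens, and hosted APIs may not be perfectly deterministic even at temperature 0. Here temperature 0 is treated as greedy decoding, which always picks the top-scoring token.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens: list[str], logits: list[float],
                 temperature: float, rng: random.Random) -> str:
    if temperature == 0:
        # Greedy decoding: always return the highest-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return tokens[best]
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical next-token scores after the prompt "1+1=".
    tokens = ["2", "3", "11"]
    logits = [5.0, 2.0, 1.5]
    rng = random.Random()
    for temperature in (0, 1.0, 2.0):
        answers = [sample_token(tokens, logits, temperature, rng) for _ in range(20)]
        print(f"temperature={temperature}: {answers.count('2')}/20 runs answered '2'")
```

At temperature 0 every run answers "2". With these made-up scores, a wrong answer gets about a 7% chance per sample at temperature 1.0 and about 28% at 2.0, and nothing in a single output tells you which kind of roll you got. That is harmless for a chat reply you can double-check, and a real problem when the sampled output decides which tool gets called.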

Google faced a backlash not too long ago when Google Search's generative AI answers (AI Overviews) told users to eat rocks, add glue to pizza, and jump off a bridge. Just the kind of answers you'd expect from that one troll in the group chat [16].

Voice: the primary modality for AI interaction

Voice will play a pivotal role in future AI agents and assistants, making it crucial to get this feature right from the outset. Surprisingly, AI companies currently tend to overlook it.

This company claims to have emotionally intelligent AI; last time I checked, their demo was very clunky and bad. Maybe it's better by the time this article is out, which will be in 10 to 20 years.

OpenAI stands out as the only company that has demonstrated a genuine commitment to enhancing AI interaction. User experience is crucial in human-machine interaction, especially when machines are intelligent and increasingly relied upon for task completion. In the future, these agents will be seamlessly integrated into spatial computing technology, allowing users to accomplish tasks using voice commands.

User experience (UX) should be carefully planned, tested with users, and iteratively refined. While creating a generic chatbot text area where users can type messages and receive responses from a server may seem like a straightforward approach, it is only suitable as a short-term solution. Companies with substantial UX teams, such as Google, can effectively address UX challenges, but smaller companies might not be able to catch up.

References:

1. https://research.google/blog/towards-a-conversational-agent-that-can-chat-about-anything/
2. https://arxiv.org/pdf/2005.14165
3. https://openai.com/index/chatgpt/
4. https://www.linkedin.com/posts/tom-alder_the-irony-is-crushing-stack-overflows-activity-7099208596369903616-EQH6
5. https://openai.com/index/hello-gpt-4o/
6. https://openai.com/index/chatgpt-can-now-see-hear-and-speak/
7. https://deepmind.google/technologies/gemini/project-astra/
8. https://deepmind.google/technologies/gemini/flash/
9. https://artificialanalysis.ai/models/gemini-1-5-flash/providers#pricing
10. https://openai.com/index/sora/
11. https://chat.lmsys.org/
12. https://www.reddit.com/r/ChatGPT/comments/1cu3s3v/gpt_4o_sucks_in_my_opinion/
13. https://www.reddit.com/r/ChatGPT/comments/1cy2z3l/gpt4o_separating_reality_from_the_hype/
14. https://www.reddit.com/r/ChatGPT/comments/1de0hiz/youll_get_bored_of_her_very_quickly/
15. https://www.advocate.com/technology/chatgpt-ceo-capitol-hill
16. https://www.bbc.com/news/articles/cd11gzejgz4o